Pathways Language Model 2 (PaLM 2) is a large language model (LLM) developed by Google. It was announced in May 2023 as a successor to the PaLM model, which was initially released in January 2022. The PaLM 2 model is trained on a massive data set of text and code and can perform a wide range of tasks, such as word completion, code completion, question answering, summarization, and sentiment analysis.

PaLM 2 is a foundation model that is core to Google’s platform for generative AI. PaLM 2 powers Bard, Google’s AI chatbot that competes with OpenAI’s ChatGPT, and Duet AI, Google’s AI assistant framework that is being integrated into products such as Google Workspace and Google Cloud.

Google is making the PaLM API available within the Vertex AI platform and as a standalone API endpoint. Developers can access the Vertex AI PaLM API today, while the standalone endpoint is available only through the Trusted Testers program.

Up and running with Python and Vertex AI

Assuming you are a subscriber to Google Cloud, this tutorial walks you through the steps of exploring the PaLM API available in the Vertex AI platform. Please note that the service is in preview, and the API may change in the future.

Let’s start by creating a Python virtual environment for our experiment.

python3 -m venv venv source venv/bin/activate

Then we install the Python module to access the Google Vertex AI platform.

pip install google-cloud-aiplatform

We will also install Jupyter Notebook, which we’ll use as our development environment.

pip install jupyter

The library, vertexai.preview.language_models, has multiple classes including ChatModel, TextEmbedding, and TextGenerationModel. For this tutorial, we will focus on the TextGenerationModel, where PaLM 2 will help us generate a blog post based on the input prompt.

As a first step, import the right class from the module.

Import vertexai.preview.language_models import TextGenerationModel

We will then initialize the object based on the pre-trained model, text-bison@001, which is optimized for generating text.

model = TextGenerationModel.from_pretrained("text-bison@001")

The next step is to define a function that accepts the prompt as an input and returns the response generated by the model as output.

def get_completion(prompt_text): response = model.predict( prompt_text, max_output_tokens=1000, temperature=0.3 ) return response.text

IDG

IDGThe method, predict, accepts the prompt, the number of tokens, and temperature as parameters. Let’s understand these parameters better.

Text generation model parameters

While we submit prompts to the PaLM API in the form of text strings, the API converts these strings into chunks of meaning called tokens. A token is approximately four characters. 100 tokens correspond to roughly 60 to 80 words. If we want the model’s output to be within 500 words, it’s safe to set the max_output_tokens value to 1000. The maximum value supported by the model is 1024. If we didn’t include this parameter, the value would default to 64.

The next parameter, temperature, specifies the creativity of the model. This setting controls how random the token selection will be. Lower temperatures are better for prompts that need a specific and less creative response, while higher temperatures can result in more diverse and creative answers. The temperature value can be between 0 and 1. Since we want a bit of creativity, we set this to 0.3.

Temperature too is an optional parameter, the default value for which varies by model. Two other optional parameters, top_k and top_p, allow you to change how the model selects tokens for output, but we’ll skip them here.

With the method in place, let’s construct the prompt.

prompt = f""" Write a blog post on renewable energy. Limit the number of words to 500. """

Invoke the method by passing the prompt.

response=get_completion(prompt) print(response)

IDG

IDGYou should see a well-written blog post generated by PaLM.

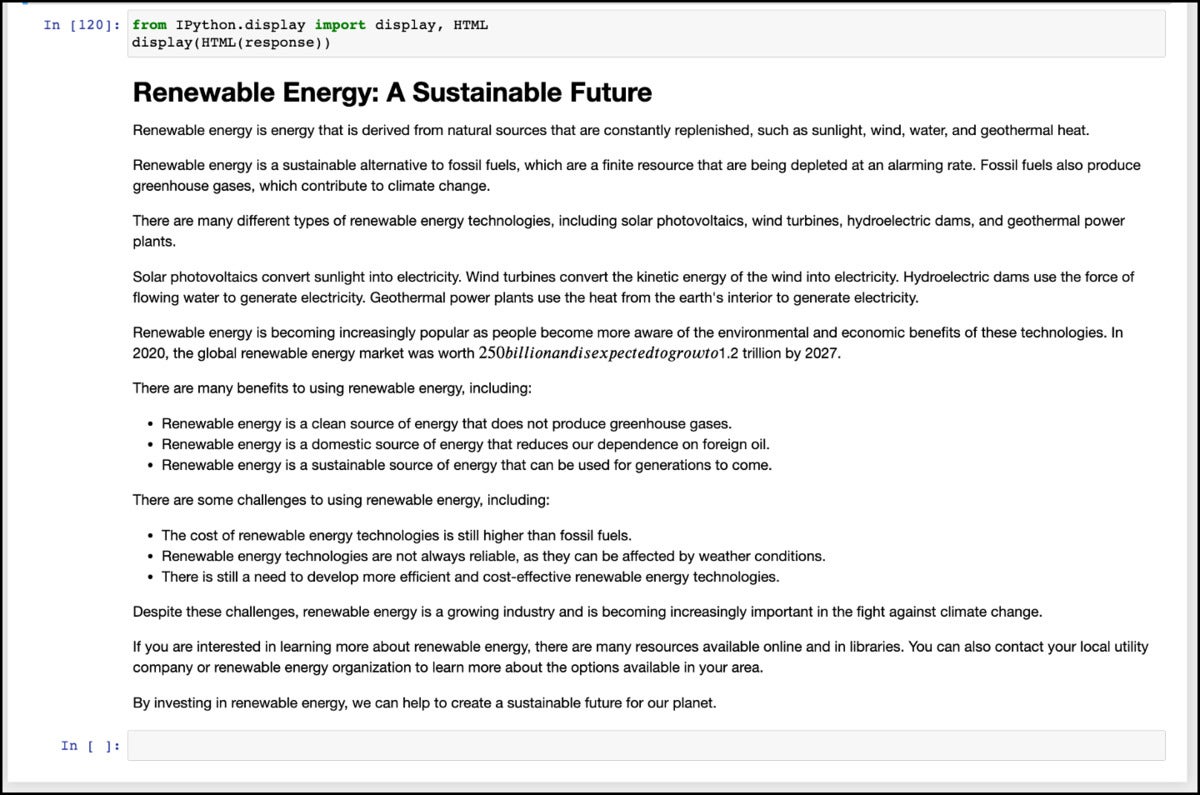

Let’s make this realistic by asking PaLM to generate the content formatted in HTML. For that, we need to modify the prompt.

prompt = f""" Write a blog post on renewable energy. Limit the number of words to 500. Format the output in HTML. """

To print the output in HTML format, we can use the built-in widgets of the Jupyter Notebook.

from IPython.display import display, HTML display(HTML(response))

You can now see the blog post formatted in HTML.

IDG

IDGBelow is the complete code for your reference.

from vertexai.preview.language_models import TextGenerationModel

model = TextGenerationModel.from_pretrained("text-bison@001")

def get_completion(prompt_text):

response = model.predict(

prompt_text,

max_output_tokens=1000,

temperature=0.3

)

return response.text

prompt = f"""

Write a blog post on renewable energy. Limit the number of words to 500.

Format the output in HTML.

"""

response=get_completion(prompt)

print(response)

from IPython.display import display, HTML

display(HTML(response))

In just a few lines of code, we have invoked the PaLM 2 LLM to generate a blog post. In upcoming tutorials, we will explore other capabilities of the model including code completion, chat, and word embeddings. Stay tuned.

Copyright © 2023 IDG Communications, Inc.