Less than a year after generative AI (genAI) made a splash with the public launch of OpenAI’s ChatGPT, Microsoft is about to make its Copilot assistant available within its ecosystem of productivity and collaboration applications. The rollout begins Nov. 1.

But while Microsoft has moved quickly to incorporate genAI into tools such as Word, Excel, and Teams, many Microsoft 365 customers are expected to take a cautious approach to deploying Copilot within their organizations. This means a focus on internal trials to identify use cases, and bolstering data security practices to mitigate the risks of connecting large language models to corporate systems.

“I would think that 2024 is a year of experimentation, as opposed to volume procurement or volume deployment,” said Matt Cain, a vice president and distinguished analyst at Gartner.

M365 customers that want access to Copilot will be required to buy a minimum of 300 seats, which is “still a bit of a steep hill to climb,” Cain said. “But I think most organizations are willing to treat it as speculative capital and say, ‘Okay, let’s see if this can really do anything for us.’”

Perhaps the most pressing issue for businesses looking to deploy Copilot involves data security. Microsoft already has security controls in place as part of its Azure Cloud, but the power of large language models (LLMs) will put any data management shortcomings into sharp focus.

The trade-off: productivity vs. security concerns?

Just as the M365 Copilot can help employees access information relevant to their roles, it could equally return sensitive and confidential files that haven’t been properly categorized and protected — anything from customer or research-and-development information to HR and payroll data.

Copilot makes it a lot easier to access this information.

Microsoft

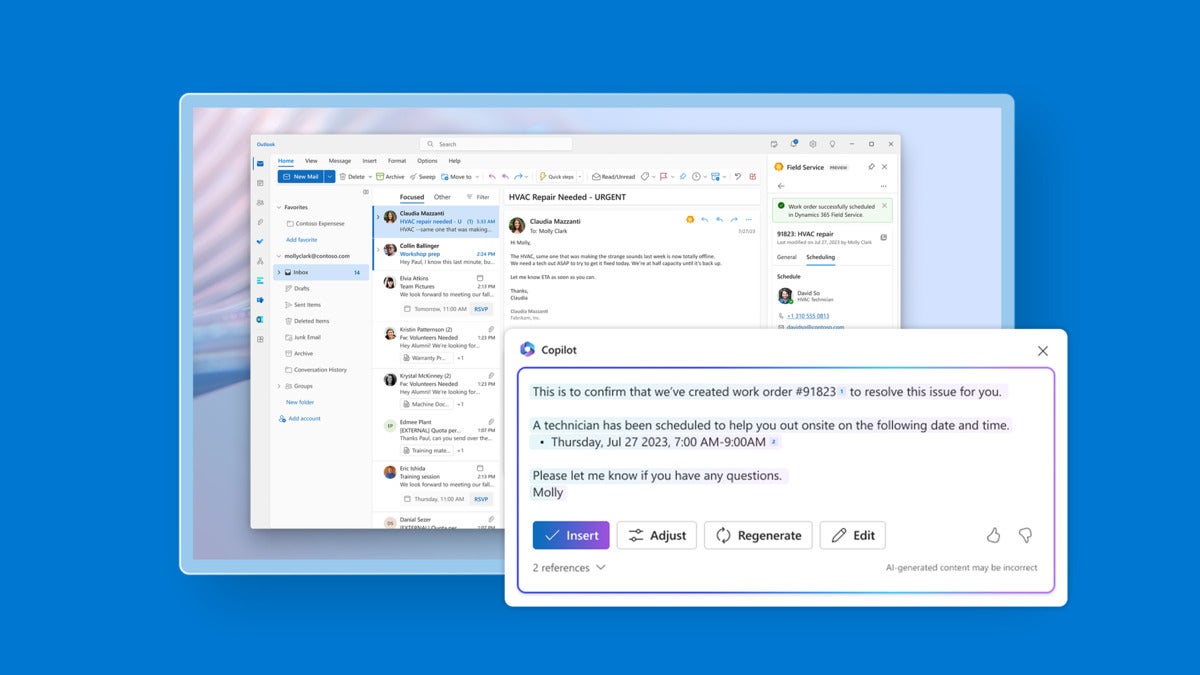

MicrosoftMicrosoft’s Copilot for the Field Service app in Outlook. (Click image to enlarge it.)

“It’s just another level,” said Rob Young, CEO of Infinity Group, a UK-based Microsoft consultancy. “All of a sudden, it’s a lot easier to surface data by asking questions. ‘How much does John Doe get paid?’ Instead of going through all of the records to find it, [the Copilot will reply] ‘John Doe, pay slip — there you go.’”

The introduction of Copilot highlights the importance of data management. “That’s the first thing that people need to focus on and get on with. It’s not to be scared of the technology, it’s just to get your foundations in place,” said Young.

Microsoft has tools that can help secure data, including its Purview Data Protection data governance tool and the ability to categorize data within SharePoint, said Young.

The reality, however, is that most businesses have significant gaps in their data management strategies, according to Matt Radolec, vice president for incident response and cloud operations at data security software vendor Varonis.

A 2022 report published by Varonis claimed that one in 10 files hosted in SaaS environments is accessible by all staff; an earlier 2019 report put that figure — including cloud and on-prem files and folders — at 22%. In many cases, this can mean organization-wide permissions are granted to thousands of sensitive files, Varonis said.

“You don’t realize how much you have access to in the average company,” said Radolec. “An assumption you could have is that people generally lock this stuff down: they do not. Things are generally open.”

In Radolec’s view, very few M365 customers have adequately addressed the risks around access to data within their organization at this stage.

“I think a lot of them are just planning to do the blocking and tackling after they get started,” he said. “We’ll see to what degree of effectiveness that is come November 1 [the M365 Copilot launch date]. We’re right around the corner from seeing how well people will fare with it.”

Trialing Copilot internally

With significant interest in the possibilities of genAI in the workplace, many M365 customers will, as Cain noted, be keen to try out the technology — even if it’s not deployed fully across their organization.

This will generally mean testing it first with smaller numbers of employees to identify where Copilot will be most effective.

French IT service provider Orange Business was one of hundreds of businesses that took part in a paid early access program (EAP) for Microsoft’s Copilot in recent months. The aim was both to build experience it could use to advise clients on implementing Copilot and, potentially, to pave the way for a wider deployment across the workforce of Orange Business and that of its parent company, telecom firm Orange.

With genAI largely untested in the workplace, the aim was to find out firsthand how staff wanted to use the Copilot, said Marie-Hélène Briens Ware, a vice president at Orange Business and head of the firm’s Workplace Together team.

Microsoft

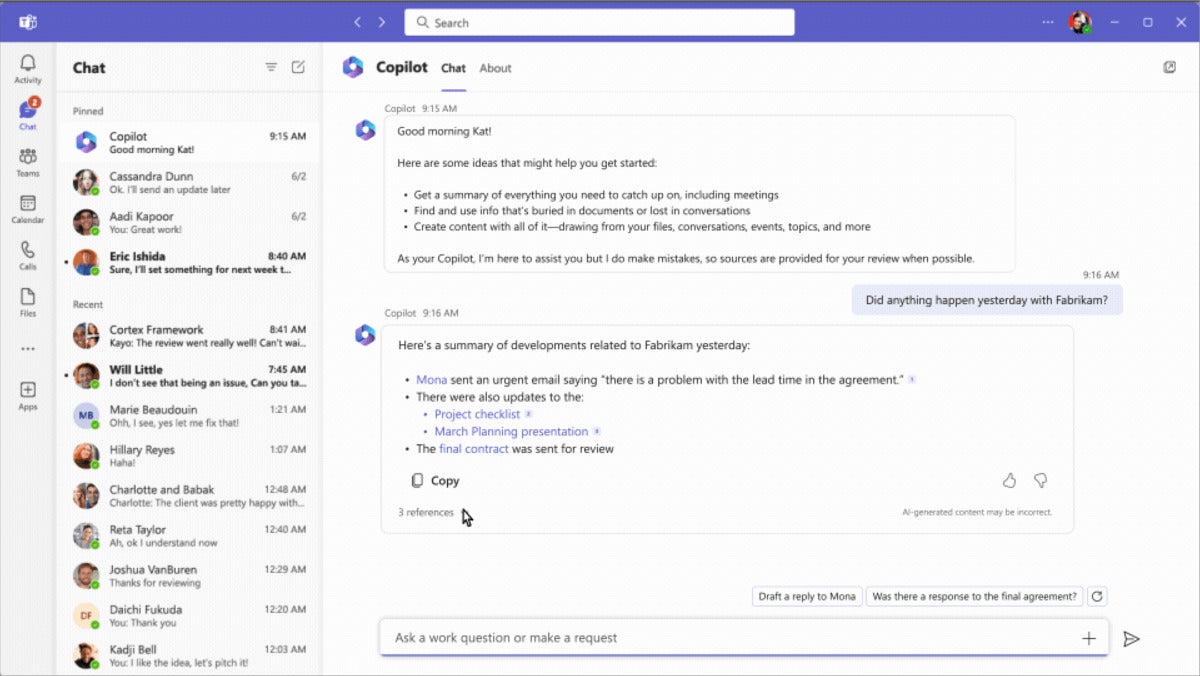

MicrosoftCopilot can aynthesize information from different sources about a project. (Click image to enlarge it.)

“The core belief we have is [that] the people who know if they can be more efficient at what they do are the people themselves,” she said.

Orange Business allocated Copilot licenses to employees across 15 job roles to see where it could be most effective, from “personal assistant to content creator to financial analyst; legal people as well,” said Briens Ware.

The company also sought diversity in terms of geographic location, and ensured that Copilot wasn’t only put in the hands of those who had a keen interest in the technology.

“We were mindful that we needed people who are incredibly enthusiastic, quite geeky, who are going to test the tool inside out and provide lots of feedback,” said Briens Ware. “That’s obviously incredibly valuable. But we also know that not everybody is going to be like this in a company, so we also wanted people who are not really geeky…. What happens when you give them that sort of tool — what they do with it, how comfortable are they with it?”

It’s an approach that was prevalent among those involved in the Copilot EAP, said Gartner’s Cain.

“You have a petri dish and you want to sprinkle your licenses as widely as possible — as many personas as possible, as many different roles as possible,” he said. “Almost every role and position and persona we’ve looked at, there are people that are getting value out of it and there are people that are not getting value out of it. So, I think it may be more personal as opposed to role-based.

“But it is early days, so maybe those patterns will emerge as we get more data.”

While many customers participating in the Copilot EAP tended to prioritize access for top performers within their organization, Gartner recommends casting a broad net.

“You would also want to include underperformers as well, because there is some evidence that generative AI can have a bigger impact on underperformers than overperformers,” said Cain.

It’s also important to get feedback from those given access to Copilot, whether they actively use it or not.

“We have a long list of questions that we would suggest: How often did you use [Copilot] and what did you use it for? Did you enjoy using it? Did it do anything odd? Did it return incorrect results? Would you recommend it to others on your team?” said Cain. “And ultimately — perhaps ironically — I think the true test of value will be, ‘Okay, you’ve had the license for a while, we’re going to shift it to someone else.’ And if that employee says, ‘No, you can’t take my license, it’s too valuable to me,’ that’s going to be the ultimate litmus test in terms of value delivered.”

Consider what success means when deploying M365 Copilot

Microsoft 365 Copilot will cost an additional $30 per user per month for those with E3 and E5 license agreements. It’s a significant outlay, and businesses may want to show a clear return on investment in the form of an uptick in productivity.
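To put that outlay in concrete terms, the two figures reported here (the 300-seat minimum and the $30 monthly price per user) can be combined into a rough floor on spending. The short Python sketch below is only an illustration: the function name, the 12-month horizon, and the absence of discounts, taxes, or the underlying E3/E5 license fees are all assumptions, not Microsoft's pricing terms.

```python
# Figures reported in the article; everything else is an illustrative assumption.
MIN_SEATS = 300             # minimum number of Copilot seats a customer must buy
PRICE_PER_SEAT_MONTH = 30   # US dollars per user per month, on top of E3/E5

def copilot_license_cost(seats: int = MIN_SEATS, months: int = 12) -> int:
    """Return the Copilot add-on cost in US dollars (hypothetical helper).

    Assumes flat list pricing with no discounts or taxes, and excludes
    the cost of the underlying E3/E5 licenses.
    """
    if seats < MIN_SEATS:
        raise ValueError(f"at least {MIN_SEATS} seats are required")
    return seats * PRICE_PER_SEAT_MONTH * months

monthly = copilot_license_cost(months=1)
annual = copilot_license_cost()
print(f"Minimum monthly outlay: ${monthly:,}")  # Minimum monthly outlay: $9,000
print(f"Minimum annual outlay: ${annual:,}")    # Minimum annual outlay: $108,000
```

On those assumptions, the smallest possible commitment works out to about $9,000 a month, or $108,000 over a year.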

But measuring that is far from straightforward, said Briens Ware. “You can’t say that any job is going to gain ‘x’ productivity thanks to Copilot, because it depends on the organization, it depends on the culture a little bit, it depends on the data available [for the Copilot to access].

“It’s a bit more complex than just saying, ‘If you’re a marketer, this is how much time you’re going to gain.’ I would recommend everybody to have a closer look and see what’s [in it] for them, particularly with their organization, their data, their culture, before they jump to any conclusion without trying the tool.”

One way to track the impact of Copilot is around time saved on tasks on a daily or weekly basis, such as using the AI assistant to summarize documents or meetings. But tracking hours saved can be a limited way of evaluating effectiveness, said Briens Ware. “The question is, what’s the implication: Can they spend more time doing other things? Does it mean they’re happier employees? Does it mean they can be more creative and spend more focused time on creative stuff?” she said.

“So A, I don’t think there is a good definition of productivity for knowledge workers. And B, I think it’s a multifaceted thing that has to do with, yes, a bit of productivity or the time gained to perform certain tasks, but also, does it reduce your mental workload? Does it allow you to do things you were not able to do before? Do you think you’re more creative?

“That’s also what we’re looking at during the [early access] program, understanding all the positive impacts of such tools and understanding what is a good measure of success for such a tool.”

Rather than focusing on a typical return on investment when making a business case for Copilot, Cain suggests focusing on a “return on employee” to highlight the wider benefits to employee experience, which is correlated with employees’ intent to stay in their current jobs.

“Every employee wants to spend less time looking for information, they want an easier time writing, they want more effective meetings. These things aren’t necessarily going to show up in a rigid ROI,” said Cain.

“Activities with Copilot will be correlated with better business results, but you can’t prove causation. That’s why it’s helpful to have an alternative narrative where you talk about a ‘return on employee,’ which is: Is it improving the worker’s digital employee experience? And so we think that’s a better lens to look at how Copilot — and genAI in general — will impact the workforce.”

Copyright © 2023 IDG Communications, Inc.